Make User Bugs Make Sense With Maya Logging

“I tried to export this file but it broke”

– Some animator

“Why won’t this animation work?”

– Another animator

“I apply a pose to this rig but it goes all weird :(“

– The same animator as the first quote, but they’re having a pretty bad day

As a pipeline TD, you may get these kinds of messages often. Equally often, the details are a bit hazy. This is not conducive to finding the exact line of code, the exact callback in the ui, that may be causing the issue. You may not even know what the issue specifically is. Writing good bug reports is difficult and time consuming, this is why we tend to hire QA testers. Regular users often can’t commit the same amount of time to it, so the reports can be lacking in detail.

Instead, help them help you. Ensure that your tools output to a log file that they can find easily, and send it along with any bug reports. But how can you get when happens in Maya’s command output window out into a file? This article details a few approaches I’ve taken and their pros/cons.

Approach 1: scriptEditorInfo()

scriptEditorInfo is a built-in maya command that allows you to echo all command output to a specified file. It’s usage is very simple:

from maya import cmds

def register_default_logging(filepath):

'''

Register logging using the default maya cmds command.

All script editor output will be passed to the given filepath.

'''

cmds.scriptEditorInfo(historyFilename=filepath)

Code language: Python (python)Now any print command you use in your tools will be diverted to a log file. Provided you print out enough detail about a user’s actions, you can track down bugs.

By using the environment variable MAYA_GUI_LOGGER_FORMAT you can also change how the messages in the command window get displayed. This follows the default syntax used for the logging.Formatter class in python. This is useful to add things like error level, module name, or a timestamp. Adding these adds noise to the command window though, so it may be trickier to parse while in normal use.

Another limitation is that this is a global setting. There may be other tools that rely on the scriptEditorInfo function, and scripts will steal the logging functionality from each other.

Approach 2: The logging module

If you need to log information in python, whether to a file, a terminal window, or an api endpoint, the logging module has you covered. There are many tutorials that explain what the logging module is and how to use it, so I won’t go too deep into it here. Suffice to say the code to set up logging to a file is also simple:

import logging

import os

def register_python_logging(filepath):

'''

Attach a file handler to the root logger pointing to a file of your choice.

Any Logger.log() calls that end up being handled by the root logger will now output to your file.

'''

handler = logging.FileHandler(filepath)

log_dir = os.path.dirname(filepath)

if not os.path.exists(log_dir):

os.makedirs(log_dir)

root_logger = logging.getLogger()

root_logger.setLevel(logging.DEBUG)

root_logger.addHandler(handler)

Code language: Python (python)Stick this somewhere in your pipeline’s boot procedure, and call the function with a filepath of your choosing. Just be aware that some modules may start being very noisy and you may need to manually shut them up (looking at you, pysideuic). Then, logging from your tools is super easy:

#in an example tool.py

import logging

log = logging.getLogger(__name__)

#...later down in the tool's functions

def export_file(export_file_path):

#an example export function with logging.

try:

log.info('Starting export')

log.debug(export_file_path)

#export code here

log.info('Export complete!')

except:

log.exception('Export failed!')

raise

Code language: Python (python)Since all log calls in submodules propagate up to the root logger all your log method calls will be written out by the FileHander you set up during the pipeline boot. No other boilerplate is needed in your modules apart from the 2 lines importing and getting your logger.

The logging module is massively configurable and extendable, the scope of which goes far beyond this article, but some good starting points would be https://www.toptal.com/python/in-depth-python-logging and https://realpython.com/python-logging/.

There is a problem with this approach though, in that it would only catch information emitted from your Logger instances. Things like print statements or output from OpenMaya.MGlobal.displayInfo or its variants will be missing from the file. Sometimes this output can hold crucial clues to a bug a user has.

Approach 3: Callbacks

So, after trying both we need a way to log fulfils the following conditions:

- Captures all messages sent to the command window

- Is independent of other plugins (or at least, more independent)

- Allows us to log to a file of our choosing

The answer lies in both the logging and OpenMaya modules.

First, we set up a logger which we can access globally. I did it via a module-level variable. We can also create a function for setting up the logger instead of doing it on module import:

FILE_LOGGER = None

LOGGER_CALLBACK_ID = None

CALLBACK_LOGGER_NAME = '_CALLBACK_LOGGER_'

def register_callback_logging(filepath):

'''

Register logging by hooking into the message sent when the command window is updated.

'''

global FILE_LOGGER

if not FILE_LOGGER:

FILE_LOGGER = logging.getLogger(CALLBACK_LOGGER_NAME)

handler = logging.FileHandler(filepath)

log_dir = os.path.dirname(filepath)

if not os.path.exists(log_dir):

os.makedirs(log_dir)

Code language: Python (python)It is here that you can also add a logging.Formatter instance to change what information gets added to the messages. It will apply only to the file, and your script editor will remain concise.

FILE_LOGGER = None

LOGGER_CALLBACK_ID = None

CALLBACK_LOGGER_NAME = '_CALLBACK_LOGGER_'

def register_callback_logging(filepath):

'''

Register logging by hooking into the message sent when the command window is updated.

'''

global FILE_LOGGER

if not FILE_LOGGER:

FILE_LOGGER = logging.getLogger(CALLBACK_LOGGER_NAME)

handler = logging.FileHandler(filepath)

log_dir = os.path.dirname(filepath)

if not os.path.exists(log_dir):

os.makedirs(log_dir)

FILE_LOGGER.propagate = False #prevents infinite recursion when logging out messages, since the root logger also makes calls to MGLobal.displayInfo et al.

global LOGGER_CALLBACK_ID

if not LOGGER_CALLBACK_ID:

LOGGER_CALLBACK_ID = OpenMaya.MCommandMessage.addCommandOutputCallback(on_command_output_callback)

Code language: Python (python)What addCommandOutputCallback is doing is registering the function on_command_output_callback to run every time something is output to the command window. You might be wondering what that Logger.propagate call does, we’ll get back to that in a second.

A word of warning: Do not register this callback before the Maya gui has been created! It will result in a fatal error. I’ve also not tested this in maya headless mode. If someone gets a chance to, please let me know how it turns out. To ensure that the callback is only registered while the gui is active, you can put the callback registration function in an evalDeferred call.

on_command_output_callback looks like this:

def on_command_output_callback(message, messageType, clientData=None):

'''

log out the message that gets output to the command window

'''

global FILE_LOGGER

if messageType in (OpenMaya.MCommandMessage.kHistory, OpenMaya.MCommandMessage.kDisplay, OpenMaya.MCommandMessage.kInfo, OpenMaya.MCommandMessage.kResult):

FILE_LOGGER.info(message)

elif messageType == OpenMaya.MCommandMessage.kWarning:

FILE_LOGGER.warning(message)

elif messageType == OpenMaya.MCommandMessage.kError:

FILE_LOGGER.error(message)

Code language: Python (python)As you can see, much like the logging module, command output has varying levels of log verbosity and severity. In the callback we can make a reasonable mapping from one log level to another, and output the message.

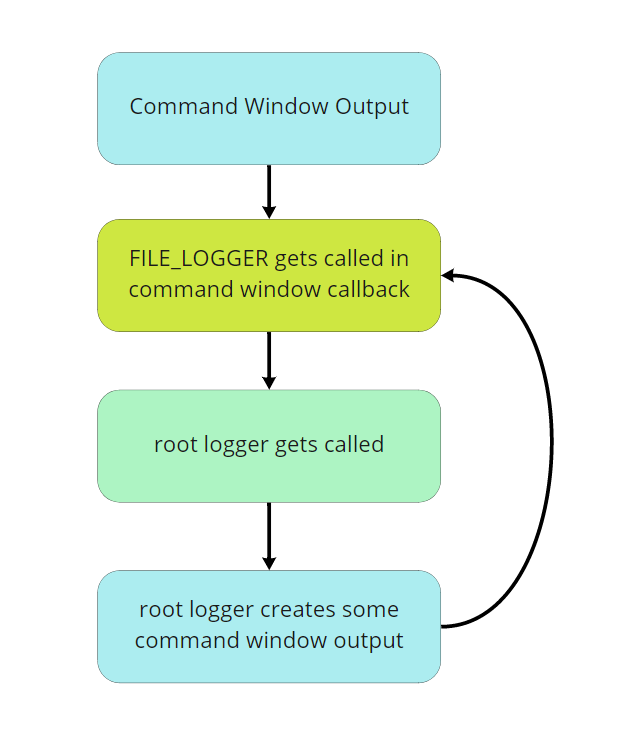

The Logger.propagate = False prevents infinite recursion. By default the logger we made will pass messages it emits up to the root logger as well, since it has handlers that Maya installs by default at startup. These handlers push output to the command window, which in turn calls the callback, which invokes the root logger… you get the picture. Setting this property to False will ensure that calls to the logger won’t reach the root logger, thus preventing the loop.

This is better, but there are still improvements that could be made. For instance running a reload on this module will clear the references to the logger and callback ID, it will leave us with a callback we can’t unregister easily. Not good.

Some may take issue with the reliance on a global variable for the logger. Internally in the logging module, the logger is a globally accessible singleton anyway though, so for simplicity’s sake I didn’t try to obscure it via some clientData dependency injection trickery. In this implementation it would get recreated with a reload though, which may or may not cause issues.

A dictionary using the various MCommandMessage levels with some logger methods as values might better than an if .. elif chain, but that would need profiling.

Ultimately, how you approach this problem is going to depend on your use case and constraints. These are just ways that I’ve found useful in the past. If you’d like to share your own approaches, correct something in this article, or just say hi, hit me up on twitter.

Stay safe, and happy scripting!